Showdown: Who is more complex, a human or ChatGPT?

Battle royale between man and machine.

With the recent developments in AI, you may be feeling a little obsolete. ChatGPT, OpenAI’s latest chatbot, can do a lot of things we once thought of as uniquely human: write college essays, generate programming code, and compose poems.

While some skills remain uniquely ours (writing novels, full-stack coding, flying planes), it seems reasonable to assume that they won’t remain so for long. The implications are unsettling—when nothing remains that we’re uniquely good at, then what good are humans? Are we about to be surpassed?

AI is getting better all the time. GPT-4 (the model behind the latest version of ChatGPT) scores in the 88th percentile on the LSAT. Two years ago, it was scoring zero. By simple extrapolation, in two more years it’ll be a boundless superintelligence, for whom the LSAT is a mere footnote in its rise to uncontested global overlord.

Or not. While there’s been a lot of focus on the abilities of AI, less attention has been paid to how it works. And even less to how we work. To get a sense of ChatGPT’s odds of matching us, let alone surpassing us, we need to compare its internal architecture to our own: AI neural network versus the human brain.

We’ll go battle royale style: five rounds, along five dimensions. By the end, we’ll have our answer: who is more complex, human or AI? And what does this tell us about the future?

Round 0: Networks

The human brain is, on one level, a network. Neurons are cells, and synapses are the connections between them.

Neurons are electrical, synapses chemical. Neurons convey information by “firing”: that is, discharging a burst of electricity. When a neuron fires, it releases chemicals into the connected synapse. These chemicals act on the neurons at the other end, altering their likelihood of firing.

In short:

- Electrical neurons either fire or don’t

- When neurons fire, connected synapses undergo a chemical change

- Neurons connected to those synapses, in turn, alter their likelihood of firing

In this way, patterns propagate through the network. Over time, synapses adapt their chemical profiles to favor certain patterns over others. We call this learning.

ChatGPT is a neural network, a type of AI modeled after the brain. Its “neurons” are I/O gates, forming the nodes of a network. These nodes are connected by parameters, the AI version of a synapse.

When a node opens (analogous to a neuron firing), it activates connected parameters. Each of these parameters has a “weight” that determines how much it affects the connected nodes’ (or “neurons’”) likelihood of opening (or firing).

To sum up:

- Nodes are open or closed

- When they open, the connected parameters activate

- These parameters alter surrounding nodes’ likelihood of opening, in proportion to each parameter’s weight

Like the synapses of a human brain, the parameters of a neural net update their “weights” as the network learns during training.
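To make the node-and-weight picture concrete, here is a minimal Python sketch of a single artificial node. It is an illustration under simplifying assumptions, not ChatGPT’s actual code: the function name `node_activation`, the example inputs and weights, and the choice of a sigmoid to turn the weighted sum into a 0-to-1 “how open is this node” value are all ours. Real networks typically use graded activations like this rather than a strict open/closed switch.

```python
import math

def node_activation(inputs, weights, bias=0.0):
    """Return a value in (0, 1): how strongly this hypothetical node 'opens'."""
    # Each upstream signal is scaled by the weight of its connection.
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The sigmoid squashes the weighted sum into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-total))

# Three upstream nodes: the first and third are "open", the second is "closed".
upstream = [1.0, 0.0, 1.0]
# Illustrative weights: positive ones push the node open, negative ones hold it shut.
weights = [2.0, -1.0, 0.5]

print(node_activation(upstream, weights))  # about 0.92
```

Learning, in this picture, means nudging the numbers in `weights` until the network’s outputs improve.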

We see that these two systems are, on one level, structured similarly.

Round 1: Which network is bigger?

First contest: who has the bigger network: human or AI?

GPT-4 is almost certainly the biggest AI neural net in existence, coming in at 1 trillion parameters, according to anonymous sources.

So how do humans compare?

The number of synapses in the human brain is unclear, but 600 trillion represents a solid mid-range estimate. That’s 600x more than GPT-4.

First-round KO. Human brain beats ChatGPT by a factor of 600.

Round 2: Synapses versus parameters

Round 1 was easy; for the next, we’ll have to go deeper. We’ve determined that synapses and parameters play a similar role in their respective networks—but which is more complex?

A parameter is essentially a number. Each one carries a “weight,” a numerical value that, along with the other parameter weights, determines which nodes will activate.

The synapses of the human brain are far more complex. A synapse isn’t a single number, but a chemical soup, made of neuromodulators. Some are probably familiar to you (dopamine, serotonin, endocannabinoids), others less so (urotensin, substance P, nucleobindin).

Our brain contains over a hundred neuromodulators. They not only interact with neurons but with each other: neuromodulators dynamically alter one another’s behavior, weaving together and apart through branching pathways of the brain.

How do we quantify the difference between a synapse—an open system alive with myriad chemical interactions—and a floating point number? Calculating liberally, we might say that the two can’t be compared: the difference is infinite, a divide-by-zero situation. But let’s give the AI the benefit of the doubt and calculate conservatively: there are over 100 neuromodulators acting on the human brain, though not all 100 act on every synapse.

Let’s estimate, conservatively, 10 neuromodulators per synapse. And let’s also estimate, again conservatively, that their interactions result in a further doubling of complexity.

Verdict: Humans win round 2, with an extra 20x complexity.

Round 3: Neuroplasticity

One of the biggest differences between us and AIs is that our brains are plastic. That is, they constantly rewire in response to our experiences.

But then: AI is constantly adapting and evolving too. It’s also dynamic, complex, amazing in its own way, etc.

Yes. But also no.

The AI adapts by altering the weight of each parameter, analogous to the human synapse altering its chemical composition. Our brains not only do this (in a far more sophisticated way, see above); they also constantly alter which neurons are connected and how. That is, synapses are created and destroyed all the time. Some research even suggests that our brain forms new neurons over the entire span of our lives.

AI neural nets are plastic to a very limited degree: during the training process, quite a few nodes are “pruned,” that is, made inactive. But the connections between nodes are fixed: no new nodes, no new parameters, no parameters changing their positions.

Once again: our brain is constantly rewiring itself. It’s changing which wires are connected. Neural nets do not do this. Their wiring is fixed, though the way signals travel through that wiring changes.

This extra degree of freedom puts the human brain unfathomably far ahead of a neural network. But estimating conservatively, we can assign this difference a magnitude of roughly 50x.

Verdict: Humans win round 3, with 50x extra complexity.

Round 4: But what about the data?

In a recent New York Times op-ed, Noam Chomsky, in an otherwise reassuringly pro-human piece, suggests a way that AI might beat humans. He points out that a major difference between us and AI is that AI does a little with a lot, and we do a lot with a little. Even if ChatGPT’s “brain” is weaker, it’s working with a larger dataset.

Might this be where AI catches up? Our brain is more powerful, but ChatGPT has access to a vast internet-based dataset, one we can never match, late nights spent in chatrooms notwithstanding. ChatGPT is an internet expert, and the internet is important: arguably the repository and distillation of all human knowledge.

GPT-3 was trained on a dataset of 570 GB, while GPT-4’s dataset is unknown. Let’s estimate a 100x difference between models, putting GPT-4’s data at about 57 terabytes, assuming the largest LLM dataset in history by a solid order of magnitude.

Impressive to be sure, but we’re not without data ourselves—we navigate the real world, which isn’t easy. How does our dataset compare?

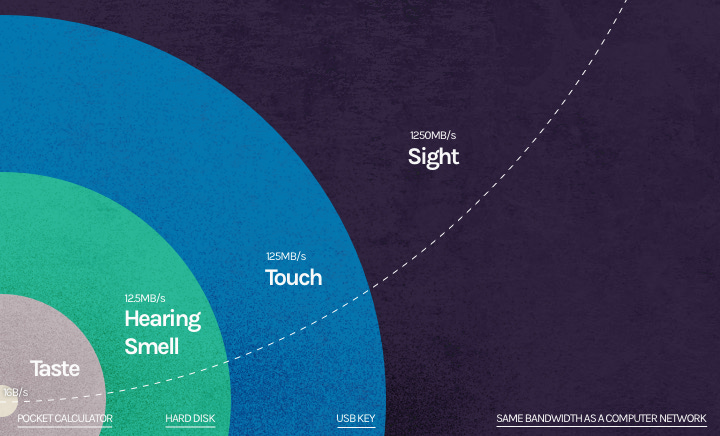

Danish physicist Tor Nørretranders has developed a system for estimating how much data we register through our senses. He estimates that our eyes process 1250 megabytes per second.

If his modeling is accurate, we take in as much sensory data in roughly half a day as our estimate of GPT-4’s entire training set.

This data isn’t all available for conscious processing, but it doesn’t have to be. It enters into our brain’s “neural network” and impacts it, weaving into our brain’s ability to think, experience, navigate the world.

We can argue that cataloging all of Wikipedia is more important than, say, gazing at a field of flowers (though some would argue the inverse). But in terms of raw data, humans triumph.

If we assume equivalence of data, then a 30-year-old human has processed about 1.3 exabytes through their senses. This beats ChatGPT by a factor of 22,807.

But that’s no fun, since with that math AI might never catch up. Further, there’s reason to assume ChatGPT’s knowledge is denser than ours (it’s pre-filtered, and it’s linguistic rather than sensory). Let’s assume that ChatGPT has the crème de la crème of data, the distilled knowledge of the ages, while ours is second-rate, real-world dross. Let’s say each of ChatGPT’s bytes is 200 times more valuable than one of ours.

This leaves us with a human advantage of about 100x.
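For readers who want to check the arithmetic, the round 4 numbers can be reproduced in a few lines of Python. Every input is one of the estimates stated above (570 GB for GPT-3, the guessed 100x scale-up, 1.3 exabytes for a 30-year-old, the 200x quality discount). The variable names are ours, and decimal units (1 GB = 10^9 bytes) are an assumption.

```python
GB = 10**9    # decimal gigabyte (assumption)
TB = 10**12
EB = 10**18

gpt3_bytes = 570 * GB            # GPT-3 training set, as stated above
gpt4_bytes = gpt3_bytes * 100    # guessed 100x scale-up for GPT-4
human_bytes = 1.3 * EB           # estimated sensory intake of a 30-year-old

raw_ratio = human_bytes / gpt4_bytes     # human data vs. GPT-4 data, byte for byte
discounted_ratio = raw_ratio / 200       # after valuing each GPT-4 byte at 200 of ours

print(f"GPT-4 estimate: {gpt4_bytes / TB:.0f} TB")
print(f"Raw human advantage: {raw_ratio:,.0f}x")
print(f"After the 200x discount: {discounted_ratio:.0f}x")
```

The discounted figure comes out near 114x, which we round down to about 100x.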

Note the extreme conservativeness of the estimate: we’re approaching bigotry levels of anti-human bias here. But still: we’re dominating AI.

Verdict: Humans win round 4, with another 100x of complexity.

Round 5: More to the mind?

When John von Neumann designed the modern-day computer, he was inspired by the most cutting-edge understanding of the human brain—circa 1945. The Von Neumann architecture is modular—with a central processor, and multiple memory stores—and powered by boolean logic, mirroring the neuron. Computers based on this design were wildly impressive, excelling at Cold War code-breaking, simulating nuclear blasts, and beating out humans on some mathematical proofs. Computer intelligence was exploding, and the field was a-buzz with predictions of AI general intelligence in the next few years, tops.

Wrong, very wrong, as we sophisticated 21st-centuryists know. The problem is the architecture: the human brain’s most important aspect isn’t its modularity, but its network. Now, with neural networks, we’ll have general AI in no time, a decade maybe. I mean, look at what it can do already!

As we’ve seen, our networks are trouncing AIs to an embarrassing degree. But it doesn’t necessarily follow that a machine with an equally impressive network will match our capacities. Because that’s not all we are.

On one level, John von Neumann was right—part of what makes the human brain so functional is its modularity and the clarity of the neuron’s boolean logic. On another level, current-day AI yaysayers are right—part of what makes our brains so special is that they’re networks responding dynamically to a complex world.

But the reality is, we’re not even close to understanding how our brains work. It’s a little rich, that in a scientific era when our best guesses at the nature of consciousness are speculation, we’ve got technologists claiming that dead-basic (compared to us) computer networks have achieved sentience.

Our understanding of the brain moves in only one direction: we’re not going to discover it’s always been simpler than we thought. Over the coming decades, we can expect major overhauls in our understanding of the human mind. We’ll likely discover dimensions of complexity in areas we never thought to pay much attention to before.

This round is fairly speculative, so we’ll refrain from assigning points. But this dimension underscores the conservativeness of our estimates: brain networks are spectacular, but they’re only a part of a deeper, subtler, greater whole, the contours of which we’re only beginning to apperceive.

Final verdict?

What a match! Let’s tally the scores and figure out who, of our two contenders, is the true complexity champion:

Round 1: 600x for the humans = # of synapses advantage

Round 2: 20x for the humans = neuromodulator advantage

Round 3: 50x for the humans = neuroplasticity advantage

Round 4: 100x for the humans = data advantage

Round 5: the unknown horizons of the brain: too speculative to tally

600 x 20 x 50 x 100 = 60,000,000

As of now, according to our most conservative estimates, we’re sixty million times more complex than GPT-4.

So how long to General AI?

Moore’s Law is the observation that the number of transistors on a chip tends to double about every two years. We could posit a similar law for neural networks: a doubling of nodes every two years.

However, this approach won’t be that effective: OpenAI’s CEO Sam Altman recently stated that increasing the number of nodes has reached the point of diminishing returns, and other strategies will be necessary to improve ChatGPT. To approach the complexity of the human brain, neural networks will have to improve along other dimensions: more complex systems for parameter weights, and the ability to dynamically create and destroy nodes.

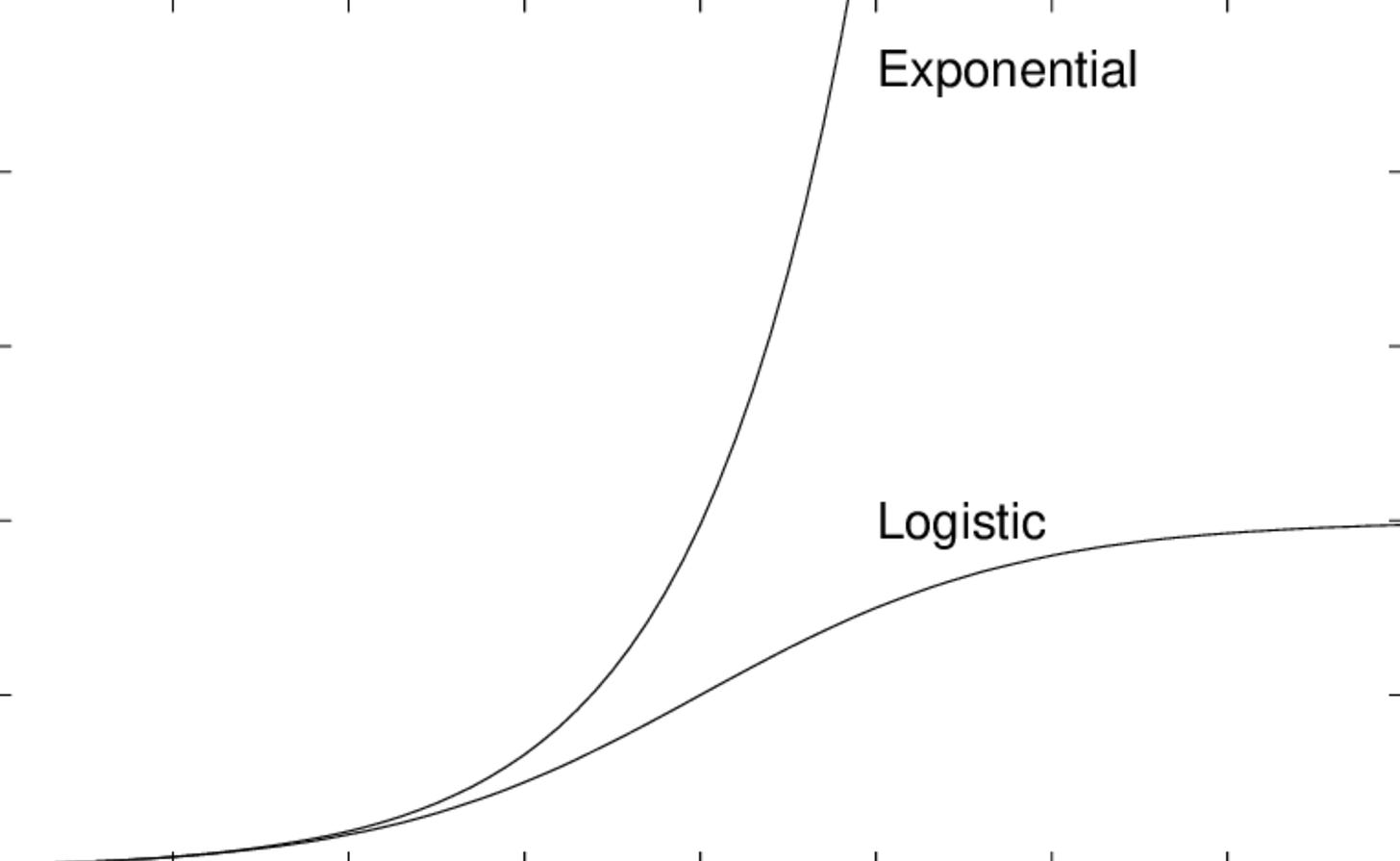

These innovations will likely take some time, as they represent entirely new technologies. Also, Kurzweilian optimism aside, we have no guarantee that AI will follow an exponential path of improvement; it could just as easily be logistic.
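The gap between those two curves is easy to see numerically. The sketch below compares pure exponential growth (doubling every two years) with a logistic curve that starts out growing at nearly the same rate but levels off at a ceiling. The ceiling of 1,000x is a made-up number chosen purely for illustration, as are the function names.

```python
import math

DOUBLING_YEARS = 2.0
RATE = math.log(2) / DOUBLING_YEARS   # growth rate that doubles capacity every 2 years
CEILING = 1_000.0                     # hypothetical cap, for illustration only

def exponential(t):
    """Capacity after t years of unchecked doubling, starting from 1."""
    return math.exp(RATE * t)

def logistic(t):
    """Starts at 1, tracks the exponential early on, then flattens at CEILING."""
    return CEILING / (1.0 + (CEILING - 1.0) * math.exp(-RATE * t))

for year in (0, 10, 20, 30, 50):
    print(year, round(exponential(year)), round(logistic(year)))
```

For the first decade or so the two are hard to tell apart, which is exactly why early progress alone can’t tell us which curve we’re on.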

But, since we’re being generous, let’s assign AI a Moore’s Law level of exponential improvement: a doubling of capacity every 2 years.

For AI to become 60,000,000 times better, we’ll need about 25.8 doubling cycles (2^25.8 ≈ 60,000,000). With one cycle happening every two years, this will take about 51.6 years.
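The tally and the doubling estimate can be reproduced with a short Python sketch. The round-by-round factors are the ones from the scorecard above, and the two-year doubling period is the generous assumption we just made; the dictionary keys and variable names are ours.

```python
import math

# Human advantage per round, taken from the final tally.
round_factors = {
    "synapse count": 600,
    "neuromodulators": 20,
    "neuroplasticity": 50,
    "data": 100,
}

total_gap = math.prod(round_factors.values())   # combined complexity gap
doublings = math.log2(total_gap)                # doublings needed to close it
years = doublings * 2                           # assumed: one doubling every 2 years

print(f"Total gap: {total_gap:,}x")
print(f"Doublings needed: {doublings:.1f}")
print(f"Years at one doubling per two years: {years:.1f}")
```

Without rounding the intermediate step, the answer lands at roughly 51.7 years; the 51.6 above comes from rounding to 25.8 doublings first.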

Will AI be able to catch up? Maybe yes, maybe no. A lot can happen in half a century. And at some point, it might reach a threshold where it has “enough” to steal something of our spark. But it’s far more likely that these AIs will reach the point where they can act passably human in some contexts, while still lacking the magic, the depth, the light of consciousness.

As of now, these machines are not beings—not even close. Our ability to believe they are says more about the imaginal capacities of the human mind than anything else.

Arielle is a writer. More of her work can be found here.